Shipping Hibernation in 3 days

Learn how quickly we built a Hibernation feature in Kubernetes using Prometheus, KEDA, and Cloudflare Workers to save costs on non-production apps.

Our goal

We've long wanted to introduce a feature that enables our customers to manage non-production applications in a cost-effective manner. By putting these applications to sleep when they're not in use, we can reduce resource consumption and help our users save costs. The result is a win-win: our customers are happier, and we're able to provide them with a more affordable solution.

Brief overview of our architecture

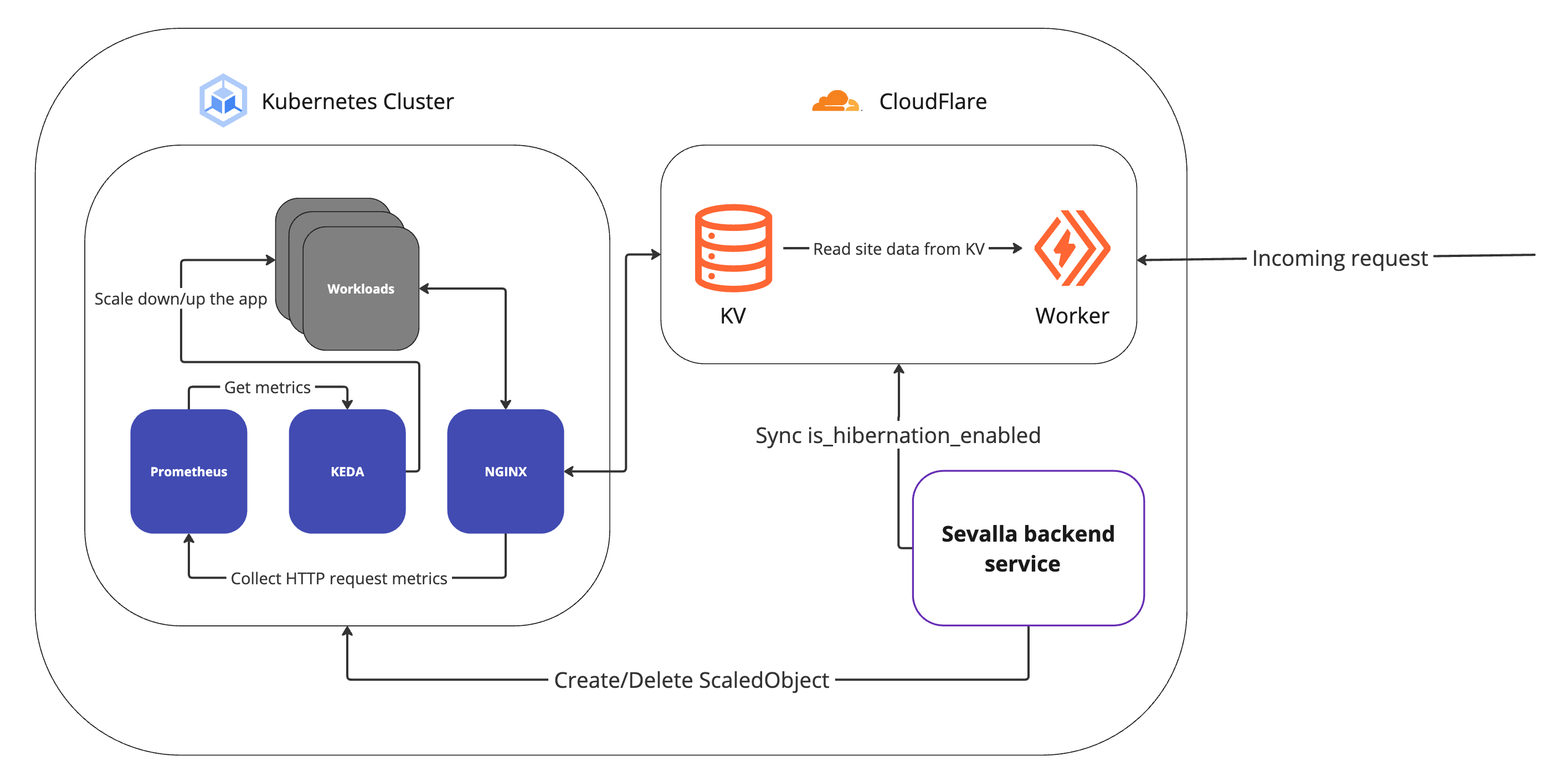

Our customers' workloads run on Kubernetes, which gives us a scalable and robust way to manage them. We deploy Prometheus in our clusters to serve real-time analytics on the Sevalla dashboard. Each cluster is also equipped with an NGINX Ingress controller, which handles incoming HTTP requests. To enhance security, we have a tight Cloudflare integration, ensuring that every cluster benefits from Cloudflare's DDoS protection. Building on this integration, we also deploy a custom Cloudflare Worker that reads data from our Cloudflare KV storage, which lets us handle requests dynamically at the edge.

The challenge

To address our goal, we needed a solution that could automatically scale resources based on HTTP request volume. We quickly discovered KEDA, the Kubernetes-based Event-Driven Autoscaler, which proved to be an ideal fit for our needs. KEDA can scale any container in Kubernetes based on the volume of events from sources such as Kafka, RabbitMQ, or Prometheus. It was a perfect match, and with KEDA the solution became surprisingly straightforward.

Leveraging KEDA for Hibernation control

We integrated KEDA with our NGINX request counts, which are tracked by Prometheus. By applying a ScaledObject, we let KEDA dynamically adjust the number of replicas for an application, scaling it to zero to enter hibernation mode and back up to leave it.
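To make the metric side concrete: KEDA's Prometheus trigger sends an instant query to the Prometheus HTTP API (`/api/v1/query`) and reduces the returned vector to a single number. The TypeScript sketch below shows that step in isolation. The helper names `instantQueryUrl` and `requestRateFrom` are hypothetical, and the handling of empty and multi-series results reflects our understanding of the scaler's default behavior, not its actual code.

```typescript
// Shape of a Prometheus instant-query response (GET /api/v1/query).
interface PromVectorSample {
  metric: Record<string, string>;
  value: [number, string]; // [unix timestamp, sample value as a string]
}
interface PromQueryResponse {
  status: "success" | "error";
  data?: { resultType: string; result: PromVectorSample[] };
  error?: string;
}

// Hypothetical helper: build the instant-query URL for a Prometheus server.
function instantQueryUrl(serverAddress: string, query: string): string {
  return `${serverAddress}/api/v1/query?query=${encodeURIComponent(query)}`;
}

// Hypothetical helper: reduce a response body to one number.
// An empty vector is treated as "no traffic" (0); more than one series is
// ambiguous, which is why the query aggregates everything with sum(...).
function requestRateFrom(body: string): number {
  const res = JSON.parse(body) as PromQueryResponse;
  if (res.status !== "success" || !res.data) {
    throw new Error(`query failed: ${res.error ?? "unknown error"}`);
  }
  const result = res.data.result;
  if (result.length === 0) return 0;
  if (result.length > 1) {
    throw new Error("query returned multiple series; aggregate with sum()");
  }
  return parseFloat(result[0].value[1]);
}
```

A `sum(rate(...))` query returns an empty vector when no matching series exist, so treating that case as zero traffic is what lets a quiet application be considered idle.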

Here's a step-by-step overview of our implementation:

- Prometheus and NGINX integration: Prometheus collects metrics from the NGINX Ingress controller, tracking request counts and other relevant data.

- KEDA configuration: We installed KEDA on our clusters to read these metrics. We configured a ScaledObject that listens to the NGINX request count metric from Prometheus. When the request count drops below a certain threshold and stays there for the configured cooldown period, the application is scaled down to zero, entering hibernation mode.

- Waking up applications: When traffic resumes, KEDA automatically scales the application back up based on the metrics, effectively "waking up" the app.
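These steps boil down to a small state machine. In KEDA, the step between zero and one replica is governed by the trigger's `activationThreshold` (0 unless set) and by `cooldownPeriod`, while `threshold` is the target the HPA uses for scaling beyond one replica, which `maxReplicaCount: 1` rules out here. The sketch below is a simplified model of that behavior, not KEDA's code; `onPoll` and the state shape are hypothetical.

```typescript
// Simplified model of a ScaledObject with minReplicaCount: 0 and
// maxReplicaCount: 1 moving between 0 and 1 replicas (illustration only).
interface HibernationConfig {
  activationThreshold: number; // KEDA's activationThreshold (defaults to 0)
  cooldownPeriodSec: number; // cooldownPeriod from the ScaledObject
}

interface HibernationState {
  replicas: 0 | 1;
  lastActiveSec: number; // last poll at which the trigger was active
}

// Hypothetical: evaluated once per pollingInterval with the current result of
// the Prometheus query (requests per second reaching the app's ingress).
function onPoll(
  state: HibernationState,
  nowSec: number,
  requestRate: number,
  cfg: HibernationConfig,
): HibernationState {
  if (requestRate > cfg.activationThreshold) {
    // Traffic above the activation threshold: wake the app or keep it awake.
    return { replicas: 1, lastActiveSec: nowSec };
  }
  // No qualifying traffic: hibernate only once the cooldown has fully elapsed.
  const cooledDown = nowSec - state.lastActiveSec > cfg.cooldownPeriodSec;
  return { replicas: cooledDown ? 0 : state.replicas, lastActiveSec: state.lastActiveSec };
}
```

Under this model, with `cooldownPeriod: 300` and a one-minute `rate` window in the query, an idle application would hibernate roughly six minutes after its last request, and the next request would wake it on the first poll that sees a non-zero rate.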

It was this simple! Here's an example of the ScaledObject configuration:

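```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: myapp-hibernation
  namespace: myapp
spec:
  scaleTargetRef:
    kind: Deployment
    name: myapp
  pollingInterval: 5   # seconds between trigger checks
  cooldownPeriod: 300  # seconds after the trigger was last active before scaling to zero
  minReplicaCount: 0   # allow scale-to-zero (hibernation)
  maxReplicaCount: 1
  advanced:
    # restore the Deployment's original replica count if this ScaledObject is deleted
    restoreToOriginalReplicaCount: true
  triggers:
    - type: prometheus
      metadata:
        serverAddress: http://prometheus-api.prometheus.svc.cluster.local:9090
        metricName: access_frequency
        threshold: '1'
        # per-second request rate across the app's ingress traffic
        query: |
          sum(rate(nginx_ingress_controller_requests{exported_namespace="myapp"}[1m]))
```

Handling requests during wake-up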

To ensure a smooth user experience, requests that arrive while an application is hibernating are handled gracefully by our Cloudflare Worker, which keeps them in a pending state.

Here’s a brief overview of how this works:

- When a user enables hibernation via the Sevalla dashboard, we set a flag, `is_hibernation_enabled`, among the relevant application's key-value pairs in the Cloudflare KV store.

- The Cloudflare Worker checks this flag to decide whether to delay incoming requests. If an application is waking up (indicated by a `503` response) and `is_hibernation_enabled` is true, the worker defers the request until the application is fully ready to serve traffic.
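The Worker's decision can be sketched in TypeScript as below. This is a minimal illustration under stated assumptions, not our production code: the KV key layout (`<app>:is_hibernation_enabled`), the retry delay, and the maximum wait are all hypothetical, and the KV binding and origin fetch are replaced by small interfaces so the sketch runs outside the Workers runtime.

```typescript
// Minimal stand-ins so the sketch runs outside the Workers runtime. In a real
// Worker, the store would be a KV namespace binding and `origin` would wrap
// fetch(request) (using request.clone() per retry if the request has a body).
interface FlagStore {
  get(key: string): Promise<string | null>;
}
type Origin = () => Promise<{ status: number }>;

const sleep = (ms: number): Promise<void> =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

// Forward a request. If the origin answers 503 and the app has hibernation
// enabled, hold the request and retry until the app stops returning 503 or
// the wait budget is used up; otherwise pass the response straight through.
async function forwardWithWakeUp(
  appKey: string,
  kv: FlagStore,
  origin: Origin,
  retryDelayMs = 1000, // hypothetical value
  maxWaitMs = 60000, // hypothetical value
): Promise<{ status: number }> {
  let response = await origin();
  if (response.status !== 503) return response;

  // Hypothetical key layout; the real KV schema is not described here.
  const flag = await kv.get(`${appKey}:is_hibernation_enabled`);
  if (flag !== "true") return response; // an ordinary 503, not a sleeping app

  const deadline = Date.now() + maxWaitMs;
  while (response.status === 503 && Date.now() < deadline) {
    await sleep(retryDelayMs);
    response = await origin();
  }
  return response;
}
```

Reading the flag only after a `503` keeps the KV lookup off the path of healthy responses, and the wait budget makes sure a deferred request still gets an answer if the application fails to start.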

This approach ensures that requests are not lost or rejected during the transition from hibernation to an active state, providing a seamless experience for end users.

Architecture overview

This image illustrates the complete architecture of our Hibernation feature.

Day-by-day recap

Day 1: Planning and creating a PoC

We discovered KEDA and began integrating it into our staging cluster. By the end of the day, we had a working proof of concept (PoC) that scaled our application up and down based on NGINX request counts.

Day 2: Implementing hibernation mode

Our focus was on handling requests during the wake-up phase. We chose to use Cloudflare Workers to defer incoming requests while the application was hibernating. At the same time, we began rolling out our infrastructure changes to production and deploying the new worker code.

Day 3: Final rollout

We completed the production rollout and implemented the required endpoints and frontend components for our dashboard. In just three days, we had a fully functional hibernation feature that was reducing costs for our customers. 🤩

Conclusion

Delivering the Hibernation feature so quickly was truly a team effort, and we're proud of what we achieved in such a short time.

This feature marks another step forward in our ongoing mission to provide powerful, user-friendly tools for managing applications in the cloud. As we continue to innovate, we're excited to see how our customers will use Hibernation to streamline their workflows and reduce costs.